How the New, High-End iMac Pro Utilizes Those 18 Cores

The new iMac Pro supports up to 18 cores. Just how can modern apps exploit all that power?

The high-end iMac Pro is a computational monster. Image credit: Apple

The key to understanding how this new iMac Pro from Apple can be so powerful lies in an OS feature called Grand Central Dispatch (GCD). GCD was developed by Apple and first released with Mac OS X 10.6, Snow Leopard, in 2009. It’s also available for iOS.

Grand Central Dispatch is an OS technology that lets the app developer more easily manage multiple concurrent paths of execution, called threads. The developer hands units of work to queues, and GCD schedules that work onto threads. The developer still has to make sure concurrently running work shares data safely, a property called thread safety, but with GCD no longer has to attend to detailed thread management.
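As a minimal sketch of that division of labor, here is what queueing work with GCD can look like in Swift. The `GuardedTotal` class, the queue label and the `sumOfSquares` function are hypothetical names chosen for illustration. GCD picks the threads; the serial queue inside `GuardedTotal` is the thread-safety part that stays with the developer.

```swift
import Foundation

// Hypothetical helper: a running total protected by a private serial queue,
// so only one block of work touches the value at a time.
final class GuardedTotal: @unchecked Sendable {
    private let queue = DispatchQueue(label: "example.guarded-total")
    private var value = 0

    func add(_ amount: Int) { queue.sync { value += amount } }
    var current: Int { queue.sync { value } }
}

func sumOfSquares(upTo limit: Int) -> Int {
    guard limit >= 1 else { return 0 }
    let total = GuardedTotal()
    let group = DispatchGroup()
    let workQueue = DispatchQueue.global(qos: .userInitiated)

    for n in 1...limit {
        // Submit a unit of work; GCD decides which thread runs it and when.
        workQueue.async(group: group) {
            total.add(n * n)
        }
    }

    // Block until every submitted unit of work has finished.
    group.wait()
    return total.current
}

print(sumOfSquares(upTo: 100)) // prints 338350
```

Nowhere does the code say how many threads to create. That decision is left to the system.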

iMac Pro Xeons

The iMac Pro, in its maximum configuration of 18 Xeon cores, can theoretically execute 36 threads at once. That’s because the Intel Xeon W, like many of its brethren in the Core family, is capable of what Intel calls “hyper-threading.” From Wikipedia:

For each processor core that is physically present, the operating system addresses two virtual (logical) cores and shares the workload between them when possible. The main function of hyper-threading is to increase the number of independent instructions in the pipeline…
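For the curious, a short Swift sketch shows how an app could ask the OS how many logical cores it sees. `ProcessInfo.processInfo.activeProcessorCount` is a Foundation property; the `hardwareThreads` helper is a hypothetical function that just restates the 18 × 2 = 36 arithmetic above.

```swift
import Foundation

// Logical cores the OS can schedule onto right now. On a CPU with
// hyper-threading enabled this is typically twice the physical core count.
let logicalCores = ProcessInfo.processInfo.activeProcessorCount

// Hypothetical helper: hardware threads for a hyper-threaded part,
// assuming two logical cores per physical core.
func hardwareThreads(physicalCores: Int, threadsPerCore: Int = 2) -> Int {
    return physicalCores * threadsPerCore
}

print("This machine reports \(logicalCores) logical cores")
print("18-core Xeon W: \(hardwareThreads(physicalCores: 18)) hardware threads")
```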

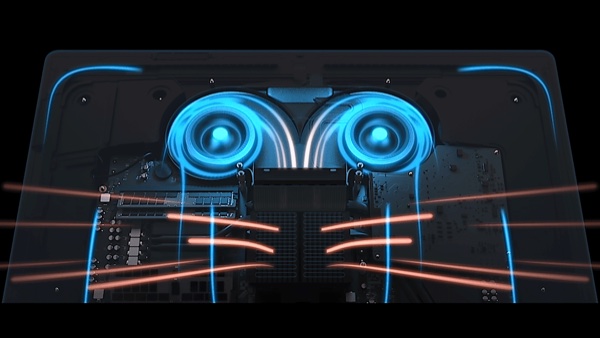

iMac Pro cooling scheme. It’s gonna need it. Image credit: Apple

Extracting That Hardware Capability

I asked several notable developers how all this works. Namely, can apps gracefully expand their use of threads, when needed, up to the limits of the hardware? The short answer is “yes!” And when should OpenCL become a consideration?

Mike Bombich (Bombich Software) launched the discussion amongst a small group I queried.

We use good design patterns to take advantage of however many cores are available, i.e., Carbon Copy Cloner (CCC) is a 64-bit app that leverages Grand Central Dispatch for queue management rather than managing a hard-coded number of threads. Most developers don’t know how many cores the end user’s Mac will have, and they shouldn’t have to care. macOS gives us tools that take advantage of those resources, we just have to write our software such that we’re chunking the work into pieces that can be distributed by GCD.

That extends to filesystem requests as well, although performance there is usually more dependent on the performance of the underlying hardware rather than on the CPU. But even there, the design pattern is the same – the developer defines their work and places it onto GCD queues, and then macOS coordinates the scheduling of that work onto processor cores.
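The "chunking" Mike describes might look something like the following Swift sketch. It is not CCC's code; `parallelSum`, `Accumulator` and the default chunk size are assumptions made up for illustration. `DispatchQueue.concurrentPerform` is the GCD call that spreads the iterations over whatever cores are available and returns once all of them have run.

```swift
import Foundation

// Hypothetical helper: a lock-protected running total shared by all chunks.
final class Accumulator: @unchecked Sendable {
    private let lock = NSLock()
    private var value = 0

    func add(_ amount: Int) {
        lock.lock()
        defer { lock.unlock() }
        value += amount
    }

    var total: Int {
        lock.lock()
        defer { lock.unlock() }
        return value
    }
}

// Sum a large array by splitting it into chunks and letting GCD
// distribute the chunks. No core count appears anywhere in the code.
func parallelSum(_ values: [Int], chunkSize: Int = 10_000) -> Int {
    precondition(chunkSize > 0, "chunkSize must be positive")
    if values.isEmpty { return 0 }

    let chunkCount = (values.count + chunkSize - 1) / chunkSize
    let accumulator = Accumulator()

    // Runs the closure once per chunk, in parallel where possible,
    // and blocks until every chunk has been processed.
    DispatchQueue.concurrentPerform(iterations: chunkCount) { chunk in
        let start = chunk * chunkSize
        let end = min(start + chunkSize, values.count)
        var partial = 0
        for i in start..<end { partial += values[i] }
        accumulator.add(partial)
    }
    return accumulator.total
}

print(parallelSum(Array(1...100_000))) // prints 5000050000
```

Each chunk sums privately and takes the lock only once, which keeps contention low. The same code would run unchanged on a 4-core laptop or an 18-core iMac Pro.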

Jordan Hubbard, who led the early work at Apple to integrate BSD UNIX into Mac OS X, provided some additional clarification.

Assuming that the applications in question use either Grand Central Dispatch queues or another mechanism which exploits the same underlying thread pool model, like NSOperation / NSOperationQueue, no application changes are required as the number of hardware cores change.

The kernel interface (pthread_workqueue) automatically spins up ‘the right number of threads’ to take maximum advantage of the underlying hardware capabilities without creating so many that thread contention occurs and application performance is actually pessimized. Similarly, the application does not (should not) decide how many threads to create manually for the same reason (it would not know how many/few to create), which is why the explicit use of the NSThread / pthread() APIs is discouraged.
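To make Jordan's point concrete, here is a hedged Swift sketch using `OperationQueue` (the Swift name for NSOperationQueue). The `ResultStore` class and the `lengths(of:)` function are hypothetical. What matters is what the code leaves out: it never sets a thread count, and `maxConcurrentOperationCount` stays at its default so the system decides how much runs at once.

```swift
import Foundation

// Hypothetical helper: lock-protected storage for per-operation results.
final class ResultStore: @unchecked Sendable {
    private let lock = NSLock()
    private var values: [Int]

    init(count: Int) { values = [Int](repeating: 0, count: count) }

    func set(_ value: Int, at index: Int) {
        lock.lock()
        defer { lock.unlock() }
        values[index] = value
    }

    var snapshot: [Int] {
        lock.lock()
        defer { lock.unlock() }
        return values
    }
}

// Run one operation per input string and collect the character counts.
func lengths(of documents: [String]) -> [Int] {
    let queue = OperationQueue()
    // maxConcurrentOperationCount is deliberately left at its default,
    // so the system picks the level of concurrency for this hardware.
    let store = ResultStore(count: documents.count)

    for (index, text) in documents.enumerated() {
        queue.addOperation {
            store.set(text.count, at: index)
        }
    }

    queue.waitUntilAllOperationsAreFinished()
    return store.snapshot
}

print(lengths(of: ["a", "bb", "ccc"])) // prints [1, 2, 3]
```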

Greg Scown (Smile Software) then pointed out a detail regarding the user interface (UI) to an app.

Jordan’s got it covered for computational operations which do not involve the user interface. I would simply add that most operations which involve the UI must run on the main thread. There is only one main thread, and so a UI-intensive application will benefit less—or not at all—from additional cores versus an application which primarily does computation.
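A common way to live with that constraint is to do the heavy lifting on a background queue and hop back to the main queue only to deliver the result. The Swift sketch below assumes a hypothetical `sumInBackground` function; the `deliverOn` parameter exists only so the pattern can be tried outside an app, where nothing drains the main queue automatically.

```swift
import Foundation

// Hypothetical helper: do the heavy work off the main thread, then hand
// the result to the queue that owns the UI (the main queue by default).
func sumInBackground(upTo limit: Int,
                     deliverOn uiQueue: DispatchQueue = .main,
                     completion: @escaping @Sendable (Int) -> Void) {
    DispatchQueue.global(qos: .userInitiated).async {
        // Stand-in for real computation; this part can use any core.
        let result = limit >= 1 ? (1...limit).reduce(0, +) : 0

        // UI updates funnel back through a single serial queue.
        uiQueue.async {
            completion(result)
        }
    }
}
```

In an app, the completion closure is where a label or progress bar would be updated. However many cores the computation used, that last step still waits its turn on the one main thread.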

That reminded Jordan Hubbard of something…

This is also true, though Greg raises a tangential point I had not thought to comment on before: Much of macOS/iOS is also now highly ‘service oriented,’ largely for security (sandboxing) vs performance reasons, and because those services are also running in different process contexts, one might reasonably posit that the entire ecosystem of running services around an application would be faster and more responsive as the number of hardware cores increase, even if said application was highly ‘UI-centric’ and running on a main thread.

I think it’s also reasonable to say that pretty much no UI app can do anything truly useful on macOS or iOS anymore without talking to one or more services via explicit or implicit IPC unless said app is running entirely un-sandboxed (on macOS) and needs no access to contacts, location data, or anything else with encapsulation/privacy rules governing access.

OpenCL/GPU vs CPU

I had one final question about the trade-off when it comes to parallel processing with OpenCL and the GPU compared to computational threads in the CPU. I asked an OpenCL expert, Dr. Gaurav Khanna (University of Massachusetts), about that. He wrote:

On the GPU side, the two places where GPUs win big is raw compute (flops) and memory bandwidth. As a comparison, the best GPUs out there can deliver 5 to 10 teraflops on a single card and about 0.5 to 1.0 TB/s memory bandwidth. The best Xeons out there are still just under a single teraflops in compute and about 100 GB/s bandwidth. That’s a big difference.

Having said that, I shouldn’t omit mentioning some of the caveats and there are many. Not all algorithms scale on the thousands of GPU cores and perform well on their very simple cores. There is also the PCIe bottleneck on most cards (although some of that is being resolved in APU, SoC, NVLink technologies).
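A back-of-the-envelope Swift sketch can show why the PCIe caveat matters. The CPU and GPU rates are taken from the low end of Dr. Khanna's figures; the PCIe transfer rate is an assumption (roughly a PCIe 3.0 x16 link), and the `gpuOffloadPaysOff` function is hypothetical. It ignores launch overhead, copying results back and how well the algorithm actually parallelizes, so treat it as a rough illustration rather than a performance model.

```swift
import Foundation

// Rough throughput figures. The first two come from the comparison above;
// the PCIe number is an assumption, roughly a PCIe 3.0 x16 link.
enum RoughRates {
    static let cpuFlopsPerSecond = 1.0e12   // "just under a single teraflops"
    static let gpuFlopsPerSecond = 5.0e12   // low end of "5 to 10 teraflops"
    static let pcieBytesPerSecond = 16.0e9  // assumed transfer rate
}

// Hypothetical estimate: true if copying the data to the GPU and computing
// there would beat computing in place on the CPU.
func gpuOffloadPaysOff(flops: Double, bytesToTransfer: Double) -> Bool {
    let cpuTime = flops / RoughRates.cpuFlopsPerSecond
    let gpuTime = bytesToTransfer / RoughRates.pcieBytesPerSecond
                + flops / RoughRates.gpuFlopsPerSecond
    return gpuTime < cpuTime
}

// Lots of arithmetic per byte moved: the GPU wins.
print(gpuOffloadPaysOff(flops: 1.0e12, bytesToTransfer: 1.0e9)) // prints true
// Little arithmetic per byte moved: the transfer dominates.
print(gpuOffloadPaysOff(flops: 1.0e9, bytesToTransfer: 1.0e9))  // prints false
```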

The bottom line is that app developers need to take a hard look at whether they are making optimal use of the system Apple has designed. And that is certainly not a trivial task!

Summary

So there you have it: a grand tour of the computational features of a powerful Mac like the iMac Pro. Apple has supplied all the tools a developer would need to exploit the power of a Mac like this, or any Mac. Or even an iPad. But it’s still up to the developer to understand the technical aspects of the machine (computation, threading, I/O, memory bandwidth and so on) to extract the best performance from any application.
